After four weeks off with the lurgy (yes, once again, I know, I know; I am on an NHS waiting list…), followed by the Christmas holidays, a family visit and loads of rest, I needed to get my brain back in gear. Enter a JISC Mail discussion about hallucinated citations, or hallucinated references, produced when using AI. Apparently there is nothing out there that is either trustworthy or affordable.

So I figured I could try to build something. Right? What could go wrong? Well, besides my laptop running too hot. And don't get me started on deployment. But with the help of AI (Gemini Pro via its API), I managed to come up with something that identifies hallucinated references, even references that Gemini Pro has hallucinated itself.

Test

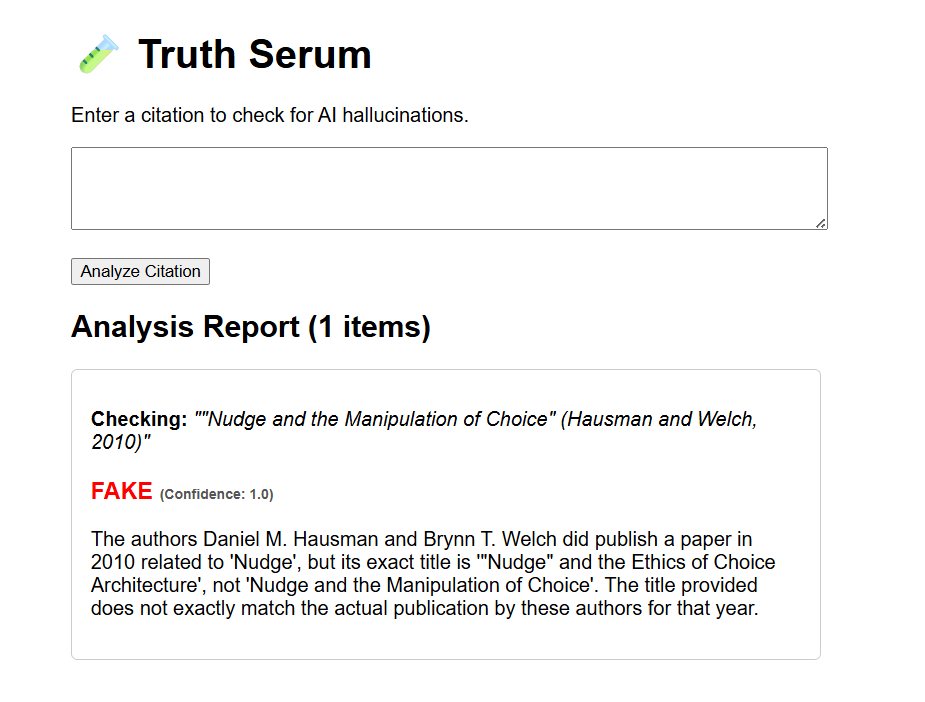

In one of its answers, Gemini suggested this reference:

Paper: “Nudge and the Manipulation of Choice” (Hausman & Welch, 2010)

This is what the check from my Gemini Pro API-based program returned:

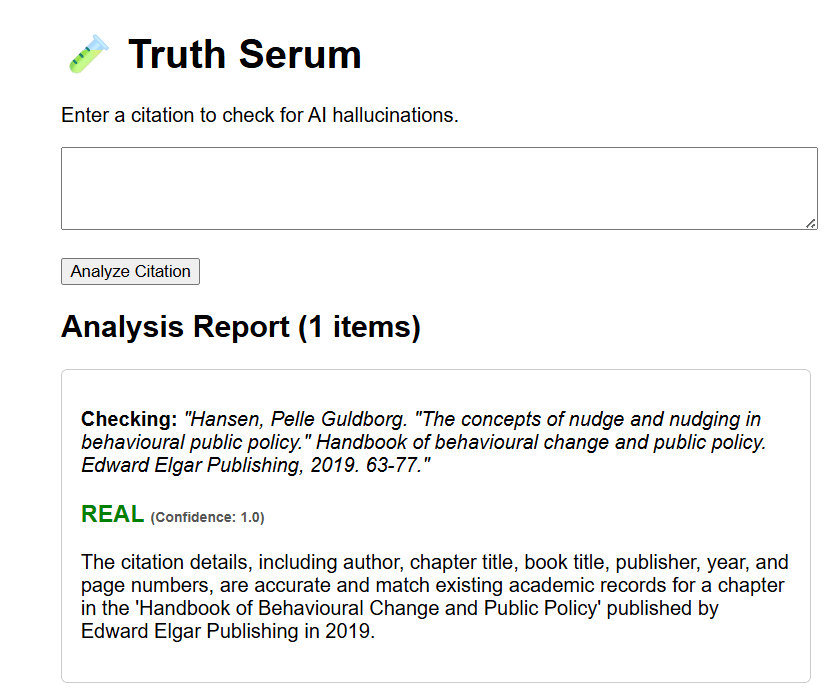

An accurate citation produces a result like this.
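The post does not describe how the checker works internally, so what follows is only a minimal sketch of one common approach, not the tool's actual logic. It assumes you already have a handful of candidate records for a citation (for example, search hits from a bibliographic database such as Crossref), fuzzy-matches the cited title against them and then compares the year. The function names, the record format and the 0.9 similarity threshold are all hypothetical choices for illustration.

```python
import re
from difflib import SequenceMatcher


def normalise(title: str) -> str:
    """Lower-case a title and collapse punctuation and whitespace so that
    trivial formatting differences do not affect the comparison."""
    title = re.sub(r"[^a-z0-9 ]", " ", title.lower())
    return " ".join(title.split())


def check_reference(cited_title, cited_year, candidates, threshold=0.9):
    """Compare a cited title and year against candidate records.

    candidates: list of dicts with "title" and "year" keys (a hypothetical
    format, e.g. the top hits from a bibliographic search).
    Returns a tuple (verdict, best_matching_record_or_None, similarity).
    """
    best, best_score = None, 0.0
    for record in candidates:
        # Similarity ratio between 0.0 (nothing shared) and 1.0 (identical).
        score = SequenceMatcher(
            None, normalise(cited_title), normalise(record["title"])
        ).ratio()
        if score > best_score:
            best, best_score = record, score

    # No candidate is close enough: treat as a possible hallucination.
    if best is None or best_score < threshold:
        return "not found", best, best_score
    # Title matches but the year differs: a real paper, cited incorrectly.
    if best["year"] != cited_year:
        return "found, year mismatch", best, best_score
    return "verified", best, best_score


if __name__ == "__main__":
    # Made-up records, purely for illustration.
    records = [{"title": "A Study of Example Titles", "year": 2020}]
    print(check_reference("A study of example titles.", 2020, records)[0])
    print(check_reference("An Entirely Invented Paper", 2020, records)[0])
```

A real checker would also compare authors and venue, since a hallucinated reference often borrows a genuine title or author and attaches it to the wrong details; anything that only matches loosely is worth checking by hand.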

Try it yourself

You can use the Render-based website below. Keep in mind that I am using free tiers, so I recommend checking no more than 20 references at a time. Press ENTER between each reference.

https://truth-serum-nathalietasler.onrender.com/

- You can also use the RubyGem: https://rubygems.org/gems/truth_serum_academic
- Or check GitHub: https://github.com/DrNTasler/truth_serum_academic

If this is too much hassle, you can use this Gemini Gem instead:

https://gemini.google.com/gem/1ZiYr3pn5f10Oc8Nw6Qz5t2osd7e43hhc?usp=sharing

PS: Let me know if the Render website has any issues. I had to update the code and the API it uses, but I think all the bugs are now fixed.

I’ve seen this problem firsthand. Tools like this are useful because AI sounding confident is exactly what makes hallucinated citations dangerous. Verification still matters.

This is probably one of the most useful things I’ve seen for academic work. Thanks for sharing!